How We Build Automation Processes From Scratch

Our product team has always wanted to deliver features and fixes as quickly and as often as possible. The project is a SaaS practice management platform for mental health clinics.

Initially, we released twice a week, then once a week, and now once every two weeks. Smoke testing took a long time: 8-10 hours of one QA engineer's time for each release. The product is large, with many features and versions, and it continues to grow. Eventually, it became clear that we needed to start automating tests.

Setting up automation

We chose the open-source Protractor framework and the TypeScript programming language for automation. For test reporting, we chose the Allure Framework.

Each release is tested in a separate Docker container on a remote server. Tests run against each new build (version) of the application that is planned for release to production.

The first auto-tests covered the simplest existing functionality. They were not based on test cases but written at the discretion of the QA automation engineer, because no manual test cases had been designed.

Most likely, this was the first mistake in our process. We should have created a base of manual test cases for automation first. We fixed this problem later.

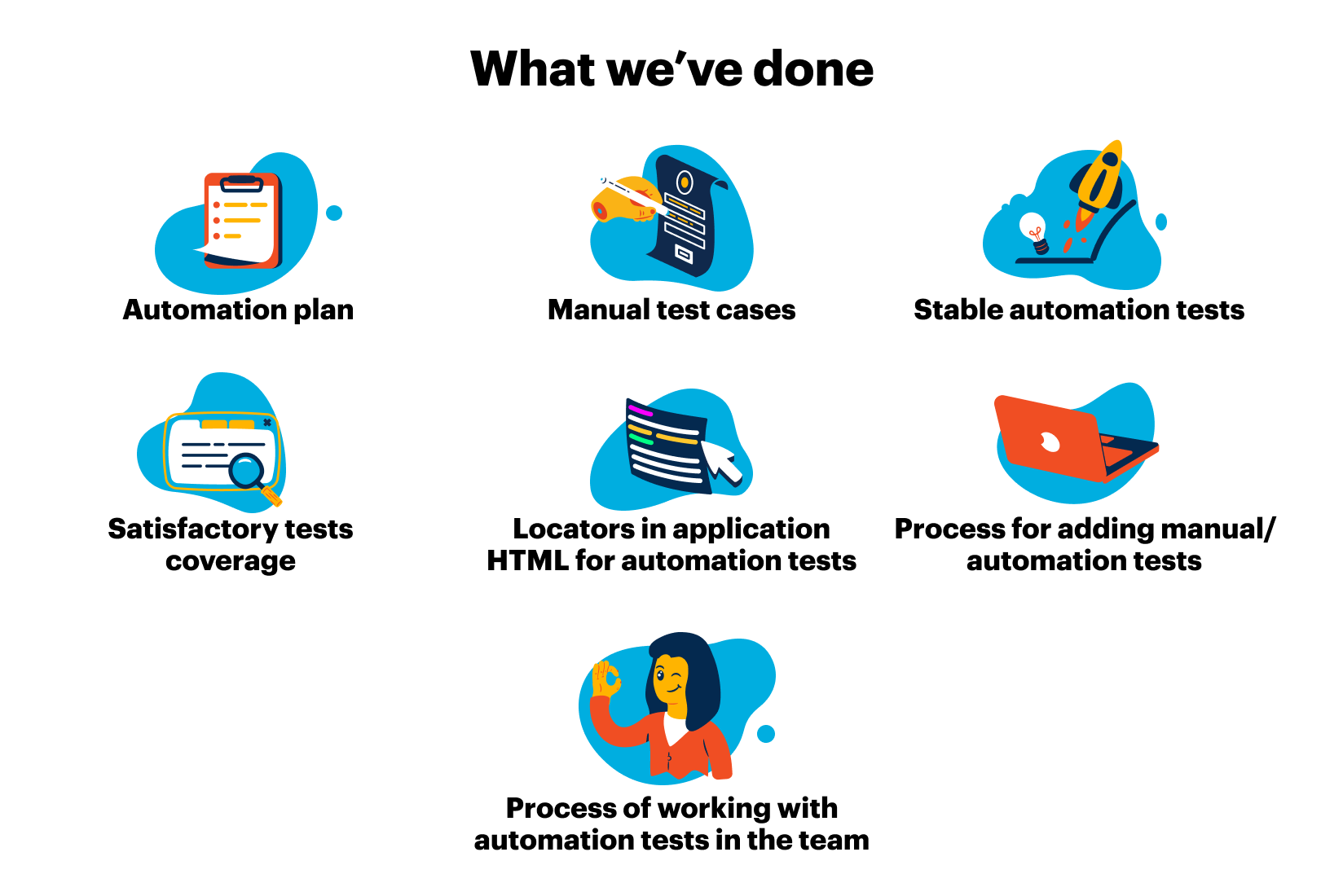

We made the first automation plan: the minimum smoke test for the project. The application was divided into semantic parts, and then we began to write manual tests and automate them. The auto-tests brought little benefit at that time because they were unstable, so we started working on that problem.

Special locators for auto-tests were added to the HTML pages of the application. Today, each release of the auto-tests passes a code review from the developers and is tested separately by the QA automation engineer before release. We also filled the Confluence space with the necessary documentation.
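To illustrate the idea of dedicated locators, here is a minimal sketch. The attribute name `data-test-id`, the helper `testIdSelector`, and the id `save-appointment` are assumptions made for the example; the project may use a different attribute or convention.

```typescript
// Hypothetical attribute name: the article does not say which attribute was added.
const TEST_ID_ATTRIBUTE = "data-test-id";

/**
 * Builds a CSS selector that matches an element by its dedicated test attribute.
 * Only letters, digits, underscores, and hyphens are accepted, so the id can be
 * placed inside the quoted attribute value without escaping.
 */
function testIdSelector(id: string): string {
  if (!/^[A-Za-z0-9_-]+$/.test(id)) {
    throw new Error(`Invalid test id: "${id}"`);
  }
  return `[${TEST_ID_ATTRIBUTE}="${id}"]`;
}

// In a Protractor spec, the selector would typically be passed to by.css, e.g.:
//   const saveButton = element(by.css(testIdSelector("save-appointment")));
//   await saveButton.click();
console.log(testIdSelector("save-appointment")); // prints [data-test-id="save-appointment"]
```

Because such attributes exist only for testing, changes to styling classes or page layout are less likely to break the selectors, which is one common way to make UI tests more stable.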

Over time, we ran into another problem: the auto-tests took a very long time to run. We solved it by running tests in parallel (as if several people were testing instead of one), which reduced the run time almost threefold.
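In Protractor, parallel runs are usually enabled through the `shardTestFiles` and `maxInstances` capability options. The sketch below shows what such a configuration might look like. The spec path, the browser, and the instance count of 3 are assumptions, not the project's actual settings. The local interfaces only mirror the few config fields used here so that the snippet stands alone; a real configuration file would import the `Config` type from `protractor` and export the object as `config`.

```typescript
// Local types mirroring the subset of Protractor config fields used in this sketch.
interface ParallelCapabilities {
  browserName: string;
  shardTestFiles: boolean; // when true, spec files are distributed across browser instances
  maxInstances: number;    // upper bound on browser instances running at the same time
}

interface ConfigSketch {
  framework: string;
  specs: string[];
  capabilities: ParallelCapabilities;
}

// All values below are illustrative assumptions.
const config: ConfigSketch = {
  framework: "jasmine",
  specs: ["./specs/**/*.spec.js"],
  capabilities: {
    browserName: "chrome",
    shardTestFiles: true,
    maxInstances: 3,
  },
};

console.log(`Up to ${config.capabilities.maxInstances} spec files run in parallel`);
```

Sharding works per spec file, so the speed-up depends on how evenly the tests are spread across files: one very long spec file can still dominate the total run time.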

Finally, processes were established so that everyone on the team had the same understanding of how to work with auto-tests. The QA engineer:

- runs auto-tests for each assigned task;

- analyzes the results;

- reports defects if bugs were found by auto-tests;

- continues the manual testing of the task.

At this point, the smoke suite for the project is ready, and we are expanding the base of manual and automated tests for regression. We already have 423 auto-tests that run in about 3 hours, whereas passing 265 manual test cases initially took 8-10 hours of one QA engineer's time. So far, auto-tests have detected about 24 defects, and that’s just the beginning.
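For a rough sense of scale, the figures above can be turned into averages per test. This is only back-of-the-envelope arithmetic on the rounded numbers quoted here. The two suites differ in size and scope, so the results are indicative rather than a strict comparison.

```typescript
// Figures quoted in the article.
const autoTests = 423;
const autoRunHours = 3; // "about 3 hours"
const manualCases = 265;
const manualHours = { min: 8, max: 10 };

// Average run time per automated test, in seconds.
const autoSecondsPerTest = (autoRunHours * 3600) / autoTests;

// Average engineer time per manual test case, in minutes.
const manualMinutesPerCase = {
  min: (manualHours.min * 60) / manualCases,
  max: (manualHours.max * 60) / manualCases,
};

console.log(autoSecondsPerTest.toFixed(1)); // prints 25.5
console.log(
  manualMinutesPerCase.min.toFixed(1),
  manualMinutesPerCase.max.toFixed(1)
); // prints 1.8 2.3
```

So an automated test takes roughly 25 seconds of machine time on average, while a manual case took about two minutes of an engineer's time.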

We cover new features with auto-tests, develop auto-tests for updates of large libraries in the project, and, of course, write tests for the most rarely changed parts of the application. Today, auto-tests help us find bugs that are not obvious at first glance and give us confidence that the application works as expected.